I’m just as enthralled with the prospect of this technology as you are.

There is no doubt in my mind that OpenAI’s Sora will become a defining advantage that marketers of every discipline leverage to strengthen their branding, audience acquisition, and content development initiatives in the months to come. While we have limited information about Sora’s capabilities, the current demo site for this precedent-setting product showcases one of the most exhilarating technologies I’ve ever seen. What OpenAI’s Sora brings to the world is truly revolutionary, in that nearly anyone will be able to create vivid animations, photorealistic video content, and who knows what else.

Sora’s capabilities are nothing short of magical. The examples OpenAI provided under the capabilities section of the website are stunning: vignettes that are astoundingly complex and hauntingly lifelike.

The “Tokyo Walk” video (which is downloadable) feels cinematic and hints at how this technology could be used to create travel videos with a distinctive cultural flair.

…and the historical “flyby” of a California “frontier” town during the Gold Rush? It feels more like a carefully composed drone shot of an expansive movie set, built with no expense spared as a period backdrop for a modern western.

…and let’s not overlook how outrageously realistic the clip of this adorable dog hopping from window to window appears. For that matter, consider the physics in this shot: the modeling Sora must have relied on to create something like this at all, let alone in such astonishingly vivid detail.

According to OpenAI’s demo site, Sora is a “diffusion model”…

“…which generates a video by starting off with one that looks like static noise and gradually transforms it by removing the noise over many steps.

Sora is capable of generating entire videos all at once or extending generated videos to make them longer. By giving the model foresight of many frames at a time, we’ve solved a challenging problem of making sure a subject stays the same even when it goes out of view temporarily.

Similar to GPT models, Sora uses a transformer architecture, unlocking superior scaling performance.”
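To make the “start from static and remove the noise over many steps” idea a little more concrete, here is a toy Python sketch. It is not how Sora works internally: OpenAI hasn’t published its implementation, and a real diffusion model uses a trained network to predict the noise at each step. In this sketch a hypothetical oracle that already knows the clean clip stands in for that network, and the tiny 4-frame “video” (a square drifting across an 8×8 grid) is invented purely for illustration. All frames are denoised together, loosely echoing the idea of giving the model many frames at once.

```python
import numpy as np


def make_toy_video(frames=4, size=8):
    """Hypothetical 'clean' clip: a bright 2x2 square drifting right one pixel per frame."""
    video = np.zeros((frames, size, size))
    for f in range(frames):
        video[f, 3:5, f:f + 2] = 1.0
    return video


def toy_denoise(target, steps=50, seed=0):
    """Toy iterative denoising (an illustration, NOT Sora's actual method).

    A trained diffusion model would predict the noise in its current sample;
    here an oracle that already knows `target` stands in for that network,
    so only the step-by-step structure of the process is shown.
    """
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(target.shape)  # every frame starts as pure static
    noise_levels = []
    for t in range(steps, 0, -1):
        predicted_noise = x - target       # oracle stand-in for the learned noise prediction
        x = x - predicted_noise / t        # strip away 1/t of the noise that remains
        noise_levels.append(float(np.abs(x - target).mean()))
    return x, noise_levels


clean = make_toy_video()
result, levels = toy_denoise(clean)
print(f"mean noise after first step: {levels[0]:.3f}, after final step: {levels[-1]:.3f}")
```

The remaining noise shrinks a little at every step until the clip emerges from the static. In a real model, the quality of the learned noise prediction, not an oracle, determines what comes out the other end.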

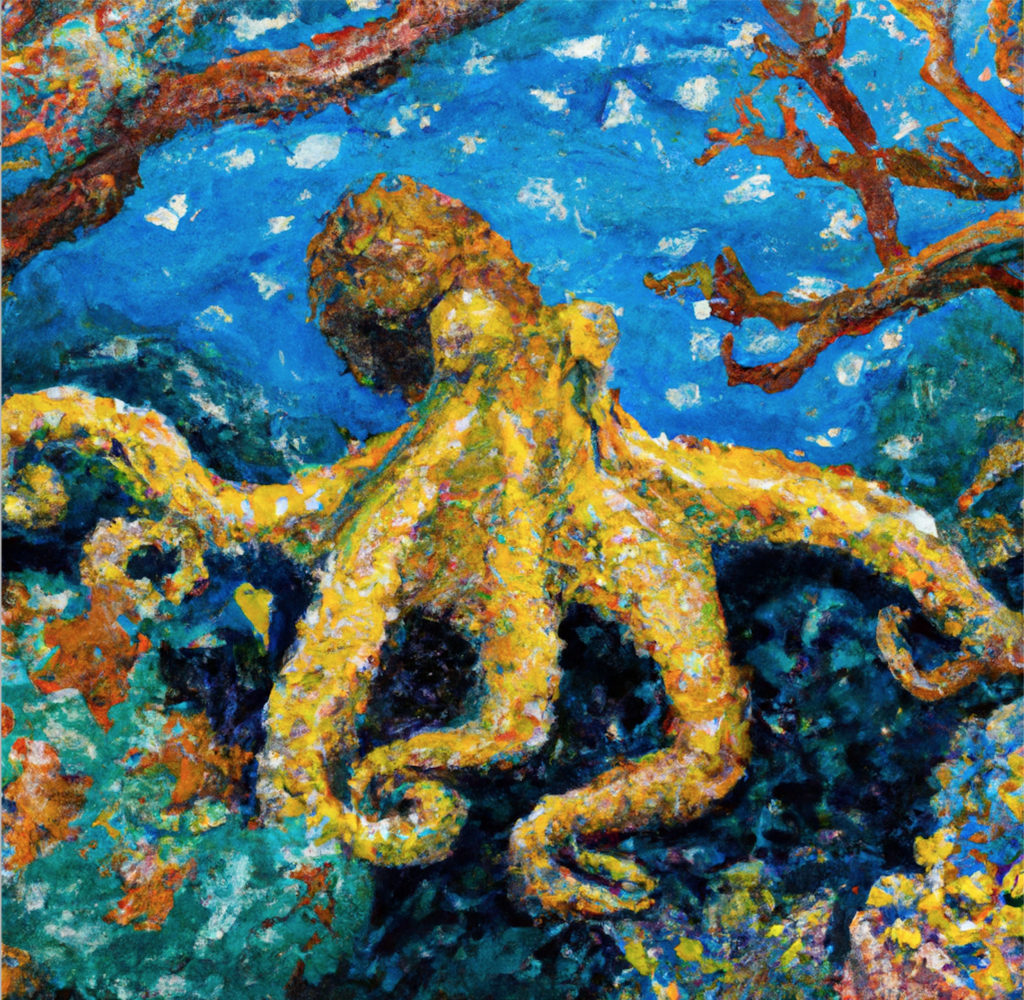

OpenAI notes that Sora builds on its past research with DALLE, which is by far my favorite AI image generator. DALLE responds well to complex prompts, creating visuals that are captivating and endlessly fascinating. Here’s an example of a “Van Gogh” style octopus I created using a fairly simple prompt:

Will OpenAI Sora transform video editing as we know it?

Video production has, for most of my life, fascinated me.

I’m self-taught in video editing, like most of my generation. I started off with iMovie, splicing together vast volumes of shaky 1080p footage from my GoPro HERO2 (circa 2011).

Since those early iMovie projects, I’ve graduated to Final Cut Pro and never looked back. No matter how much I practice, video editing remains an inordinately tedious task, and that’s before you count actually going out and shooting the footage in the first place. Most of the content on my YouTube channel is “adventure” and “travel” themed, and to be fully transparent, visiting and filming exotic places has cost me tens of thousands of dollars. Let’s not even get into how much time I’ve spent editing the 60 videos I’ve shared publicly.

What I’m getting at is that OpenAI Sora could very well be the revolutionary technology that lets us produce content without actually visiting those places. The applications are limitless. Consider the ability to produce animated tutorial videos of complex processes (e.g. surgical guides, construction techniques, advanced culinary endeavors), or to create aerial, drone-style landscape videos of exotic places: past, present, future, or fantastical. This technology will have applications far beyond anything I could possibly envision as I sit here contemplating the remarkable versatility of what OpenAI Sora might be able to do.

What is the OpenAI Sora Release Date?

That is exactly what everyone wants to know. OpenAI has been clear about Sora’s release date in a discussion thread on its community forum: as of this writing (2/16/24), there is no scheduled release date. The technology is being tested by a limited team of thought leaders in the AI industry to ensure the product is “safe” for the world to use.

There are countless applications of “text to video” technology that could be used to create all manner of manipulative, deceitful, and outright dangerous media. OpenAI has a well-documented history of being “careful” with its products, building in restrictions and limitations that prevent its technologies from being used for nefarious purposes. I’d love to think that creative applications of this technology would take center stage…but I’m certainly not naive…and if there’s one thing I’ve learned about humanity since the pandemic…it’s that people are…brutal.

If I had to make a prediction, I’d estimate that Sora will be available to the general public (perhaps only to paid subscribers) by the third quarter of 2024. The technology is clearly close to being ready for public use, and OpenAI is masterful at building “hype” around its products. As the media frenzy ensues and the discussion around OpenAI Sora continues to unfold, I suspect a variety of influencers, journalists, and technology evangelists will be showcasing what this enthralling product can do.

Can you see an OpenAI Sora demo?

Absolutely. OpenAI makes it easy to see what Sora can do on the product’s demo page.

It is currently a landing page which showcases several applications of the technology, all of which are absolutely mesmerizing.

OpenAI Sora Pricing: Too good to be true?

While there is no current indication from OpenAI of what the pricing model for Sora will be, I would imagine it will be similar to what’s currently available for ChatGPT. I predict that a subscription to Sora could be anywhere from $50/month to $500/month (with enterprise applications or subscriptions available for more advanced features).

The prospect of Sora being available as a subscription is extremely attractive to me, both as a marketer and as someone interested in video production. I consider the current pricing of the paid version of ChatGPT to be the deal of a lifetime. I subscribed to ChatGPT as soon as it was available, and I use it nearly every day. As a marketer and entrepreneur, I consider ChatGPT an indispensable asset, and I have no doubt that OpenAI Sora will be immeasurably important as a marketing tool in the years to come.

OpenAI Sora: How to Use It Effectively, Practically, and Professionally

That is another question on everyone’s mind. I anticipate that OpenAI Sora will be very easy to use once it is made publicly accessible. It will certainly require a thoughtful prompt (the more detailed the better), because that’s the premise of every publicly available generative AI tool. AI prompt engineering, as a discipline, is becoming extraordinarily “in demand” as more and more marketing, advertising, and editorial professionals come to rely on tools like Gemini and ChatGPT to create various media resources.

I suspect that proficiency in using OpenAI Sora will depend heavily on your capacity to think critically, creatively, and to communicate effectively. The more nuanced and detailed your prompts are, the more dynamic and intricate the products will be.

When it comes to using OpenAI tools effectively, I imagine Sora will be able to interpret various “signals” from the intent of the prompt itself. That requires a prompt that explicitly, clearly, and creatively distinguishes the elements OpenAI Sora should factor in when generating the video content. Much like with DALLE, the more specifically you cite the elements of the visual you want, the closer you can get to the result you have in mind.
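As a rough illustration of distinguishing those elements explicitly, the Python sketch below treats a prompt as a checklist of fields (subject, setting, camera, lighting, style) and flags the ones left blank. Sora had no public API at the time of writing, so nothing here calls OpenAI; the VideoPrompt class and its field names are hypothetical, a way to organize your own thinking rather than a format Sora is known to require.

```python
from dataclasses import dataclass, field


@dataclass
class VideoPrompt:
    """Hypothetical checklist for writing a detailed text-to-video prompt.

    The field names are illustrative assumptions, not an OpenAI format.
    """
    subject: str
    setting: str
    camera: str = ""
    lighting: str = ""
    style: str = ""
    extras: list[str] = field(default_factory=list)

    def missing_details(self) -> list[str]:
        """Name the optional elements left blank, as a nudge toward more detail."""
        optional = {"camera": self.camera, "lighting": self.lighting, "style": self.style}
        return [name for name, value in optional.items() if not value]

    def render(self) -> str:
        """Join the filled-in elements into one plain-English prompt."""
        parts = [f"{self.subject} in {self.setting}"]
        labeled = [("Camera", self.camera), ("Lighting", self.lighting), ("Style", self.style)]
        parts += [f"{label}: {value}" for label, value in labeled if value]
        parts += self.extras
        return ". ".join(parts) + "."


sparse = VideoPrompt(subject="A dog", setting="a town")
detailed = VideoPrompt(
    subject="A spotted dog hopping from one windowsill to the next",
    setting="a row of brightly painted houses along a canal",
    camera="slow lateral tracking shot at window height",
    lighting="late-afternoon sun with soft shadows",
    style="photorealistic, shallow depth of field",
)
print(sparse.missing_details())  # ['camera', 'lighting', 'style']
print(detailed.render())
```

The sparse prompt leaves the model to guess the camera, lighting, and style, while the detailed one spells each of them out. Whether Sora actually rewards this kind of structure is an open question until it is publicly available.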

In a professional context, I see OpenAI Sora as an opportunity to create compelling brand videos that showcase various aspects of a product, service, or culture without having to shoot, color correct, or tailor any footage (though I’m sure you will be able to bring the file into an editor and adapt it as you see fit). Professionally, OpenAI Sora could be used to build a repository of video assets showing a brand’s products or services in practical use. This could be a game changer for demonstrating products or services in various scenarios without having to coordinate an expansive video shoot!

As mentioned on the OpenAI Sora demo page:

“Sora serves as a foundation for models that can understand and simulate the real world, a capability we believe will be an important milestone for achieving AGI.”

…that is a major indication that OpenAI Sora is just the beginning of what’s coming. It’s the “foundation” for future models that can simulate the real world. That is a startling concept, and I, for one, can’t wait to see what’s around the corner.